Think back to how much text messaging changed the way we communicate. Soon after, touch screens revolutionized the way UI was designed and developed for mobile devices. We are now looking at the future of how we interact with technology.

Watching the first video for Project Soli was both impressive and exciting. The Soli sensor is a tiny radar chip created by Google that tracks touchless interactions at a sub-millimeter level. Google plans to scale the chip down until it is small enough to fit inside wearable devices. The goal is to take touch gestures on the small screens of wearables and other devices out of the equation, replacing them with fine gesture recognition.

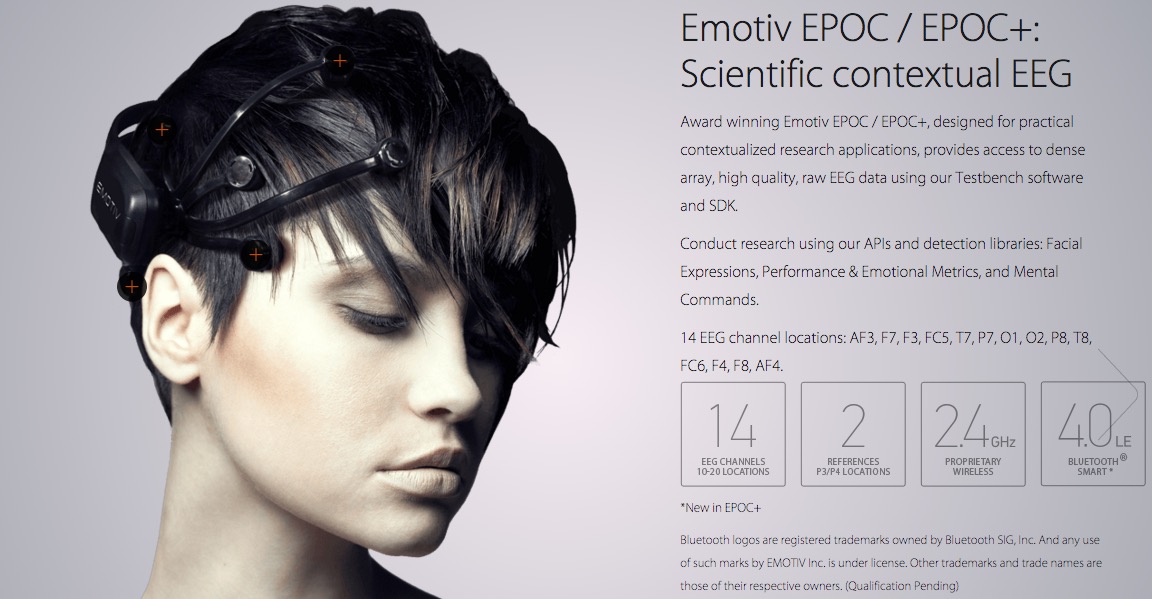

Even more fascinating is Emotiv’s EPOC / EPOC+, a revolutionary wearable technology that uses electroencephalography (EEG) to read and record your brain waves and convert them into usable data. “You think, therefore you can” is the concept behind EPOC. It is a whole different level of interaction, and it can gather research data through Emotiv’s APIs and libraries, which can detect facial expressions, performance and emotional metrics, and mental commands.

Neither of these technologies is a “look into the future” type of product. They exist today!

As someone who is both creative and scientific, I find this fascinating because neither product uses any current form of UI. If devices do not require touch interaction or data input from you because they can simply read your mind or register your gestures, where does that leave traditional touch screen apps? Or screens? Could User Telepathy replace User Interaction?

The post Is the Future of Tech Making UI Irrelevant? appeared first on webdesignledger